Mimicking a database index

Have you ever wondered how database indices are implemented? Let's dive into it.

Database indices are kind of magical. You apply an index to a specific column and all the queries using that column perform miraculously faster!

Have you ever wondered how they are implemented?

This post is not going to dive into any real database internals (though you might like the references), but I hope to give you a brief idea of how you could mimic what a database would do.

Although Kotlin is used for the code samples, it should apply to most programming languages without a big redesign.

The naïve store

Let's start with a simple object store:

data class User(val email: String)

object store {

private val users = mutableListOf<User>()

fun add(user: User) {

users.add(user)

}

fun find(email: String): User? {

for (user in users) {

if (user.email == email) {

return user

}

}

return null

}

}It will hold a list of users, and given an email, it will loop over the list and return the user we want, or a null value when not found:

fun main(args: Array<String>) {

val user = User("john@doe")

store.add(user)

val found = store.find(user.email) ?: "not found"

println("user is ${found}") // user is john@doe

val notFound = store.find("not@found") ?: "not found"

println("user is ${notFound}") // user is not found

}Measuring the time to find a user

In order to compare the time taken, let's implement a helper function:

fun timeTaken(fn: () -> Unit): Long {

val before = Calendar.getInstance().timeInMillis

fn()

val after = Calendar.getInstance().timeInMillis

return after - before

}Sidenote: the time taken in milliseconds is not a metric to measure time complexity, because it will heavily depend on the hardware and the technology. The Big O Notation is going to be used instead to discuss the time complexity, but it’s easier when it comes to visibility, to spit the time taken in milliseconds on the screen, so I’m sticking to it as a benchmarking tool only.

To search for a user using the current implementation takes linear time (O(n)):

fun main(args: Array<String>) {

for (i in 0 until 30_000_000) {

val user = User(email="someone@${i}")

store.add(user)

}

val milliseconds = timeTaken {

val notFound = store.find("not@found")?.email ?: "not found"

println("user ${notFound}")

// user not found

}

println("time taken = ${milliseconds}")

// time taken = 363

}Iterating over 30.000.000 items is no joke! Now imagine a database table with trillions of records.

Using the binary search algorithm

Applying the binary search algorithm to the find method would improve it by reducing the time complexity to O(log n). Out of the 30.000.000 records, only 7 iterations would be needed till the record is found since the list is halved on every iteration. It's quite an improvement!

However, we are inserting the records in order in the store, and we can't rely on the list of records to be always ordered. That would invalidate the usage of the binary search over the list, and changing the add method to insert items in order would make it perform in linear time, and that sounds not a good idea.

But what if, rather than the list, we had a data structure where we could insert and look for items in a much more efficient manner?

Here comes the clever idea of using a self-balancing binary search tree for the indices.

An index as a self-balancing binary search tree

In order to have an efficient index for lookups, we need a data structure to store our values, in a way that we can apply the binary search efficiently.

A tree is perfect for that, but if we use a simple binary tree, and insert the items in order, the tree will be unbalanced, because only the leaves to the right will be created. So, a requirement is that it should be a balanced binary tree.

Implementing a balanced binary search tree is an interesting exercise, but it will not be addressed in this post. The java.util package has an implementation called TreeMap, and we'll take advantage of it. Under the hood, it's an implementation of a Red-Black Tree, which is a kind of self-balancing binary search tree, and it guarantees insertions, deletes, and lookups in O(log n) worst case.

Let's dive into it:

Let's add an index for the user email in the store;

Every time a user is added, we insert the email in the index, along with the position from the list;

When searching for the user, we replace the linear for-loop to use the b-tree, getting the position to retrieve the user from the list;

import java.util.TreeMap

data class User(val email: String)

object store {

private val users = mutableListOf<User>()

private val emailIndex = TreeMap<String, Int>()

fun add(user: User) {

users.add(user)

emailIndex.put(user.email, users.size-1)

}

fun find(email: String): User? {

val idx = emailIndex.get(email) ?: -1

return if (idx > -1) users.get(idx)

else null

}

}After re-running the same test, now that we have an index in place, we'll be able to see how much of an impact:

fun main(args: Array<String>) {

for (i in 0 until 30_000_000) {

val user = User(email="someone@${i}")

store.add(user)

}

val milliseconds = timeTaken {

val notFound = store.find("not@found")?.email ?: "not found"

println("user ${notFound}")

// user not found

}

println("time taken = ${milliseconds}")

// time taken = 22

}It's down from 363 to 22 milliseconds!

The more we increase the number of records, the more we'll see the discrepancy in time.

Why not a hashmap?

You might be wondering, why not using then a hashmap?

If we want to keep all the records in memory, that's a good idea. It would allow us to get rid of the list of users and the tree for the index in favor of the hashmap only, resulting in a constant insert and lookup (O(1)). Bingo!

Not so fast cowboy...

If you're trying to follow along, writing down the code yourself and running it, you should have bumped into the OutOfMemory error. It can be easily solved by increasing the JVM memory limit with the parameter -Xmx4096m (where 4096m is the amount of memory in MB).

Thus, considering the hashmap needs to be loaded into memory at full, is it worth using it rather than the b-tree?

Let's talk about trade-offs

As with almost anything in software engineering, whether we should use a hashmap or a b-tree as an index, it depends.

Having enough memory to hold all the data makes the hashmap a lot more appealing, but if the data does not fit in memory, and storing it on disk space is an option, the b-tree could be stored on the file system in smaller chunks that can be loaded on-demand.

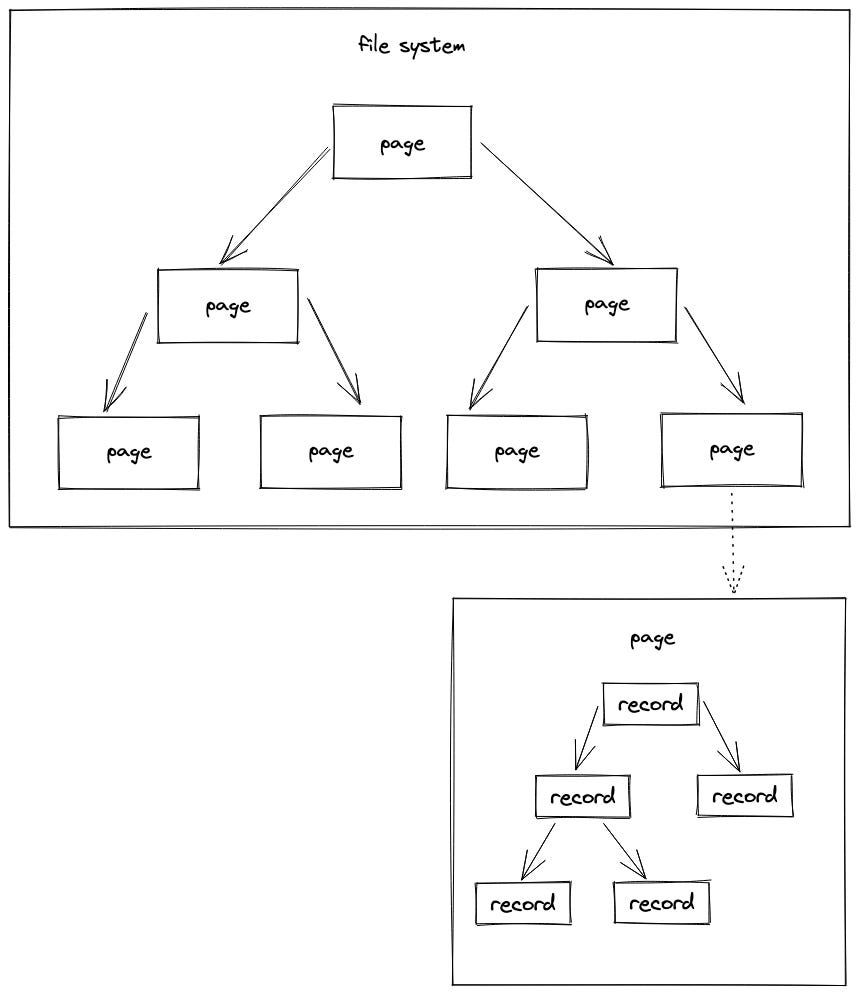

Blocks, Pages & Records

In databases, there’s usually a page abstraction, on top of the file system, which is a data structure that contains a set of records. How many records a page contains depends on the size of the records and the block size on the file system.

Pages tend to be about as big as the block size of the file system. This way data is stored on the file system following the block size, avoiding as many empty gaps per block as possible.

A nested b-tree of pages and records could be leveraged to load data on-demand, while still keeping the O(log n) complexity. As seen in the simplified diagram below:

Concluding

We covered a lot of ground above and it is rather long already. Let's continue talking about databases in future posts.

I hope this text has been didactic. Please leave your questions and comments and don't forget to check the references below. If you made it thus far, you're going to enjoy reading them as much as I did.